
Superintelligence Is Not Omniscience

Jeffrey Heninger and Aysja Johnson, 7 April 2023

The Power of Intelligence

It is often implicitly assumed that the power of a superintelligence will be practically unbounded. It seems like there could be "ample headroom" above humans, i.e. that a superintelligence will be able to vastly outperform us across virtually all domains.

By "superintelligence," I mean something which has arbitrarily high cognitive ability, or an arbitrarily large amount of compute, memory, bandwidth, etc., but which is bound by the physical laws of our universe.1 There are other notions of "superintelligence" which are weaker than this. Limitations of the abilities of this superintelligence would also apply to anything less intelligent.

There are some reasons to believe this assumption. For one, it seems a bit suspicious to assume that humans have close to the maximal possible intelligence. Secondly, AI systems already outperform us in some tasks,2 so why not suspect that they will be able to outperform us in almost all of them? Finally, there is a more fundamental notion about the predictability of the world, described most famously by Laplace in 1814:

Given for one instant an intelligence which could comprehend all the forces by which nature is animated and the respective situation of the beings who compose it - an intelligence sufficiently vast to submit this data to analysis - it would embrace in the same formula the movements of the greatest bodies of the universe and those of the lightest atom; for it, nothing would be uncertain and the future, as the past, would be present in its eyes.3

We are very far from completely understanding, and being able to manipulate, everything we care about. But if the world is as predictable as Laplace suggests, then we should expect that a sufficiently intelligent agent would be able to take advantage of that regularity and use it to excel at any domain.

This investigation questions that assumption. Is it actually the case that a superintelligence has practically unbounded intelligence, or are there "ceilings" on what intelligence is capable of? To foreshadow a bit, there are ceilings in some domains that we care about, for instance, in predictions about the behavior of the human brain. Even unbounded cognitive ability does not imply unbounded skill when interacting with the world. For this investigation, I focus on cognitive skills, especially predicting the future. This seems like a realm where a superintelligence would have an unusually large advantage (compared to e.g. skills requiring dexterity), so restrictions on its skill here are more surprising.

There are two ways for there to be only a small amount of headroom above human intelligence. The first is that the task is so easy that humans can do it almost perfectly, like playing tic-tac-toe. The second is that the task is so hard that there is a "low ceiling": even a superintelligence is incapable of being very good at it. This investigation focuses on the second.

There are undoubtedly many tasks where there is still ample headroom above humans. But there are also some tasks for which we can prove that there is a low ceiling. These tasks provide some limitations on what is possible, even with arbitrarily high intelligence.

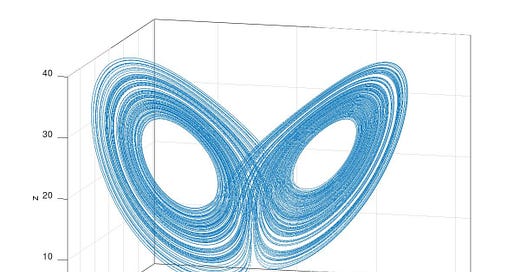

Chaos Theory

The main tool used in this investigation is chaos theory. Chaotic systems are systems in which uncertainty grows exponentially in time. Most of the information measured initially is lost after a finite amount of time, so reliable predictions about their future behavior are impossible beyond that time.
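To make the exponential growth concrete, here is a minimal Python sketch. It assumes the logistic map with r = 4 as a stand-in chaotic system (a standard textbook example, not one analyzed in this post), and the function names, starting point, and threshold are illustrative choices. Two trajectories start a tiny distance apart, and the code counts how many steps pass before they visibly disagree.

```python
def logistic(x, r=4.0):
    """One step of the logistic map, a standard toy chaotic system (assumed here)."""
    return r * x * (1.0 - x)


def steps_until_divergence(x0, delta0, threshold=0.1, max_steps=1000):
    """Count steps until two trajectories that start delta0 apart differ by more than threshold.

    Returns None if they never separate that far within max_steps.
    """
    a, b = x0, x0 + delta0
    for n in range(1, max_steps + 1):
        a, b = logistic(a), logistic(b)
        if abs(a - b) > threshold:
            return n
    return None


if __name__ == "__main__":
    # Hypothetical starting point and initial uncertainty, chosen only for illustration.
    print(steps_until_divergence(0.2, 1e-12))
```

With an initial difference of 1e-12, the two trajectories typically disagree by more than 0.1 after some tens of steps: eleven orders of magnitude of initial precision buy only a short window of agreement.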

A classic example of chaos is the weather. Weather is fairly predictable for a few days. Large simulations of the atmosphere have gotten consistently better for these short-time predictions.4

After about 10 days, these simulations become useless. The predictions from the simulations are worse than guessing what the weather might be using historical climate data from that location.

Chaos theory provides a response to Laplace. Even if it were possible to exactly predict the future given exact initial conditions and equations of motion,5 chaos makes it impossible to approximately predict the future using approximate initial conditions and equations of motion. Reliable predictions can be made only for a short period of time, before the uncertainty has grown too large.

There is always some small uncertainty. Normally, we do not care: approximations are good enough. But when there is chaos, the small uncertainties matter. There are many ways small uncertainties can arise. Every measuring device has a finite precision.6 Every theory should only be trusted in the regimes where it has been tested. Every algorithm for evaluating the solution has some numerical error. The system is never fully isolated from external forces that you are not considering. At small enough scales, thermal noise and quantum effects provide their own uncertainties. Some of this uncertainty could be reduced, allowing reliable predictions to be made for a bit longer.7 Other sources of this uncertainty cannot be reduced. Once these microscopic uncertainties have grown to a macroscopic scale, the motion of the chaotic system is inherently unpredictable.
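The limited payoff from reducing the initial uncertainty can be sketched with a little arithmetic. Assume, as an idealization, that an initial uncertainty delta0 grows like delta0 * exp(growth_rate * t). Then the time until it reaches some tolerance is ln(tolerance / delta0) / growth_rate. The symbols and the function name below are illustrative assumptions, not notation from this investigation.

```python
import math


def prediction_horizon(initial_uncertainty, tolerance, growth_rate):
    """Time until an exponentially growing uncertainty reaches the tolerance.

    Assumes the idealized model: uncertainty(t) = initial_uncertainty * exp(growth_rate * t).
    """
    if initial_uncertainty <= 0 or tolerance <= 0 or growth_rate <= 0:
        raise ValueError("all arguments must be positive")
    return math.log(tolerance / initial_uncertainty) / growth_rate


if __name__ == "__main__":
    # Hypothetical numbers: each row improves measurement precision a thousandfold.
    for delta0 in (1e-3, 1e-6, 1e-9):
        print(delta0, prediction_horizon(delta0, tolerance=1.0, growth_rate=1.0))
```

Under this model, each thousandfold improvement in precision adds the same fixed amount to the horizon, so going from 1e-3 to 1e-6 only doubles it. The returns to better measurement are logarithmic.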

Completely eliminating the uncertainty would require making measurements with perfect precision, which does not seem to be possible in our universe. We can prove that fundamental sources of uncertainty make it impossible to know important things about the future, even with arbitrarily high intelligence. Atomic scale uncertainty, which is guaranteed to exist by Heisenberg’s Uncertainty Principle, can make macroscopic motion unpredictable in a surprisingly short amount of time. Superintelligence is not omniscience.

Chaos theory thus allows us to rigorously show that there are ceilings on some particular abilities. If we can prove that a system is chaotic, then we can conclude that the system offers diminishing returns to intelligence. Most predictions of the future of a chaotic system are impossible to make reliably. Without the ability to make better predictions, and plan on the basis of these predictions, intelligence becomes much less useful.

This does not mean that intelligence becomes useless, or that there is nothing about chaos which can be reliably predicted.

For relatively simple chaotic systems, even when what in particular will happen is unpredictable, it is possible to reliably predict the statistics of the motion.8 We have learned sophisticated ways of predicting the statistics of chaotic motion,9 and a superintelligence could be better at this than we are. It is also relatively easy to sample from this distribution to emulate behavior which is qualitatively similar to the motion of the original chaotic system.
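As a minimal sketch of predictable statistics, consider again the logistic map with r = 4, which is an assumed toy system rather than one studied in this post. Individual trajectories from different starting points quickly become unrelated, but their long-run averages agree. The function names, starting points, and run lengths are illustrative choices.

```python
def logistic(x, r=4.0):
    """One step of the logistic map, a standard toy chaotic system (assumed here)."""
    return r * x * (1.0 - x)


def long_run_statistics(x0, n_steps=100_000, burn_in=1_000):
    """Return (time-averaged x, fraction of steps with x < 0.25) along one trajectory."""
    x = x0
    # Discard the early steps so the result does not depend on the starting point.
    for _ in range(burn_in):
        x = logistic(x)
    total = 0.0
    below_quarter = 0
    for _ in range(n_steps):
        x = logistic(x)
        total += x
        if x < 0.25:
            below_quarter += 1
    return total / n_steps, below_quarter / n_steps


if __name__ == "__main__":
    # Two hypothetical, unrelated starting points.
    print(long_run_statistics(0.2))
    print(long_run_statistics(0.7))
```

For this particular map, the known long-run distribution has a mean of 1/2 and puts one third of its weight below 0.25, so both runs should land close to those values even though the two trajectories themselves look nothing alike.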

But chaos can also be more complicated than this. The chaos might be non-stationary, which means that the statistical distribution and qualitative description of the motion themselves change unpredictably in time. The chaos might be multistable, which means that it can do statistically and qualitatively different things depending on how it starts. In these cases, it is also impossible to reliably predict the statistics of the motion, or to emulate a typical example of a distribution which is itself changing chaotically. Even in these cases, there are sometimes still patterns in the chaos which allow a few predictions to be made, like the energy spectra of fluids.10 These patterns are hard to find, and it is possible that a superintelligence could find patterns that we have missed. But it is not possible for the superintelligence to recover the vast amount of information rendered unpredictable by the chaos.

This Investigation

This blog post is the introduction to an investigation which explores these points in more detail. I will describe what chaos is, how humanity has learned to deal with chaos, and where chaos appears in things we care about - including in the human brain itself. Links to the other pages, blog posts, and report that constitute this investigation can be found below.

Most of the systems we care about are considerably messier than the simple examples we use to explain chaos. It is more difficult to prove claims about the inherent unpredictability of these systems, although it is still possible to make some arguments about how chaos affects them.

For example, I will show that individual neurons, small networks of neurons, and in vivo neurons in sense organs can behave chaotically.11 Each of these can also behave non-chaotically in other circumstances. But we are more interested in the human brain as a whole. Is the brain mostly chaotic or mostly non-chaotic? Does the chaos in the brain amplify uncertainty all the way from the atomic scale to the macroscopic, or is the chain of amplifying uncertainty broken at some non-chaotic mesoscale? How does chaos in the brain actually impact human behavior? Are there some things that brains do for which chaos is essential?

These are hard questions to answer, and they are, at least in part, currently unsolved. They are worth investigating nevertheless. For instance, it seems likely to me that the chaos in the brain does render some important aspects of human behavior inherently unpredictable, and it seems plausible that chaotic amplification of atomic-level uncertainty is essential for some of the things humans are capable of doing.

This has implications for how humans might interact with a superintelligence and for how difficult it might be to build artificial general intelligence.

If some aspects of human behavior are inherently unpredictable, that might make it harder for a superintelligence to manipulate us. Manipulation is easier if it is possible to predict how a human will respond to anything you show or say to them. If even a superintelligence cannot predict how a human will respond in some circumstances, then it is harder for the superintelligence to hack the human and gain precise, long-term control over them.

So far, I have been considering the possibility that a superintelligence will exist and asking what limitations there are on its abilities.12 But chaos theory might also change our estimates of the difficulty of making artificial general intelligence (AGI) that leads to superintelligence. Chaos in the brain makes whole brain emulation on a classical computer wildly more difficult - or perhaps even impossible.

When making a model of a brain, you want to coarse-grain it at some scale, perhaps at the scale of individual neurons. The coarse-grained model of a neuron should be much simpler than a real neuron, involving only a few variables, while still being good enough to capture the behavior relevant for the larger scale motion. If a neuron is behaving chaotically itself, especially if it is non-stationary or multistable, then no good enough coarse-grained model will exist. The neuron needs to be resolved at a finer scale, perhaps at the scale of proteins. If a protein itself amplifies smaller uncertainties, then you would have to resolve it at a finer scale, which might require a quantum mechanical calculation of atomic behavior.

Whole brain emulation provides an upper bound on the difficulty of AGI. If this upper bound ends up being farther away than you expected, then that suggests that there should be more probability mass associated with AGI being extremely hard.

Links

I will explore these arguments, and others, in the remainder of this investigation. Currently, this investigation consists of one report, two Wiki pages, and three blog posts.

Report:

Chaos and Intrinsic Unpredictability. Background reading for the investigation. An explanation of what chaos is, some other ways something can be intrinsically unpredictable, different varieties of chaos, and how humanity has learned to deal with chaos.

Wiki Pages:

Chaos in Humans. Some of the most interesting things to try to predict are other humans. I discuss whether humans are chaotic, from the scale of a single neuron to society as a whole.

AI Safety Arguments Affected by Chaos. A list of the arguments I have seen within the AI safety community which our understanding of chaos might affect.

Blog Posts:

Superintelligence Is Not Omniscience. This post.

You Can’t Predict a Game of Pinball. A simple and familiar example which I describe in detail to help build intuition for the rest of the investigation.

Whole Bird Emulation Requires Quantum Mechanics. A humorous discussion of one example of a quantum mechanical effect being relevant for an animal’s behavior.

Other Resources

If you want to learn more about chaos theory in general, outside of this investigation, here are some sources that I endorse:

Undergraduate Level Textbook:

S. Strogatz. Nonlinear Dynamics And Chaos: With Applications To Physics, Biology, Chemistry, and Engineering. (CRC Press, 2000).

Graduate Level Textbook:

P. Cvitanović, R. Artuso, R. Mainieri, G. Tanner and G. Vattay, Chaos: Classical and Quantum. ChaosBook.org. (Niels Bohr Institute, Copenhagen 2020).

Wikipedia has a good introductory article on chaos. Scholarpedia also has multiple good articles, although no one obvious place to start.

The "What is Chaos?" sequence of blog posts by The Chaostician.

Notes

In this post, "we" refers to humanity, while "I" refers to the authors: Jeffrey Heninger and Aysja Johnson.

The quote continues: "The human mind offers, in the perfection which it has been able to give to astronomy, a feeble idea of this intelligence. Its discoveries in mechanics and geometry, added to that of universal gravity, have enabled it to comprehend in the same analytic expressions the past and future states of the system of the world. Applying the same method to some other objects of its knowledge, it has succeeded in referring to general laws observed phenomena and in foreseeing those which given circumstances ought to produce. All these efforts in the search for truth tend to lead it back continually to the vast intelligence which we have just mentioned, but from which it will always remain infinitely removed. This tendency, peculiar to the human race, is that which renders it superior to animals; and their progress in this respect distinguishes nations and ages and constitutes their true glory."

Laplace. Philosophical Essay on Probabilities. (1814) p. 4. https://en.wikisource.org/wiki/A_Philosophical_Essay_on_Probabilities.

Interestingly, the trend appears linear. My guess is that the linear trend is a combination of exponentially more compute being used and the problem getting exponentially harder.

Nate Silver. The Signal and the Noise. (2012) pp. 126-132.

Whether or not this statement of determinism is true is a perennial debate among scholars. I will not go into it here.

The most precise measurement ever is of the magnetic moment of the electron, with 9 significant digits.

NIST Reference on Constants, Units, and Uncertainty. https://physics.nist.gov/cgi-bin/cuu/Value?muem.

Because the uncertainty grows exponentially with time, if you try to make longer-term predictions by reducing the initial uncertainty, you will only get logarithmic returns.

If the statistics are predictable, this can allow us to make a coarse-grained model for the behavior at a larger scale which is not affected by the uncertainties amplified by the chaos.

Described in the report Chaos and Intrinsic Unpredictability.

Also described in Chaos and Intrinsic Unpredictability.

The evidence for this can be found in Chaos in Humans.

This possibility probably takes up too much of our thinking, even prior to these arguments.

Wulfson. The tyranny of the god scenario. AI Impacts. (2018) https://aiimpacts.org/the-tyranny-of-the-god-scenario/.
