AI Impacts Quarterlyish Newsletter, Jul-Oct 2023

Every quarter, we publish a newsletter with updates on what’s happening at AI Impacts, with an emphasis on what we’ve been working on. You can see past newsletters here and subscribe to receive more newsletters and other blogposts here.

Since our last newsletter, we reviewed some empirical evidence for AI risk, completed two case studies about standards, and wrote a few other blog posts and wiki pages. Recently we have been busy with ongoing projects, including the 2023 Expert Survey on Progress in AI, whose results we will share soon.

Research and writing highlights

Case studies about standards

In response to Holden Karnofsky’s call for case studies on standards that are interestingly analogous to AI safety standards, Harlan and Jeffrey completed case studies for two examples of social-welfare-based standards:

Jeffrey wrote a report about institutional review boards (IRBs) for medical research. IRBs were formed after some unethical medical experiments were publicized. The US government does not regulate medical research directly; instead, it requires institutions that do medical research to regulate it in compliance with federal rules. The principles of medical ethics developed in the US spread widely across the world with little effort from the US government.

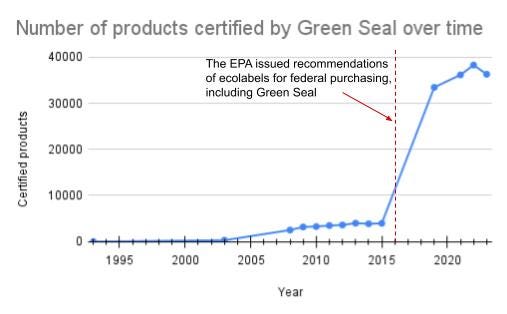

Harlan wrote a report about Green Seal and SCS, the first eco-labeling programs in the US. These programs failed to transform the consumer market as they initially intended. However, by establishing themselves as experts at the right time, they may have influenced the purchasing behavior of institutions, as well as the creation of other eco-labeling programs.

Empirical evidence for misalignment and power-seeking in AI

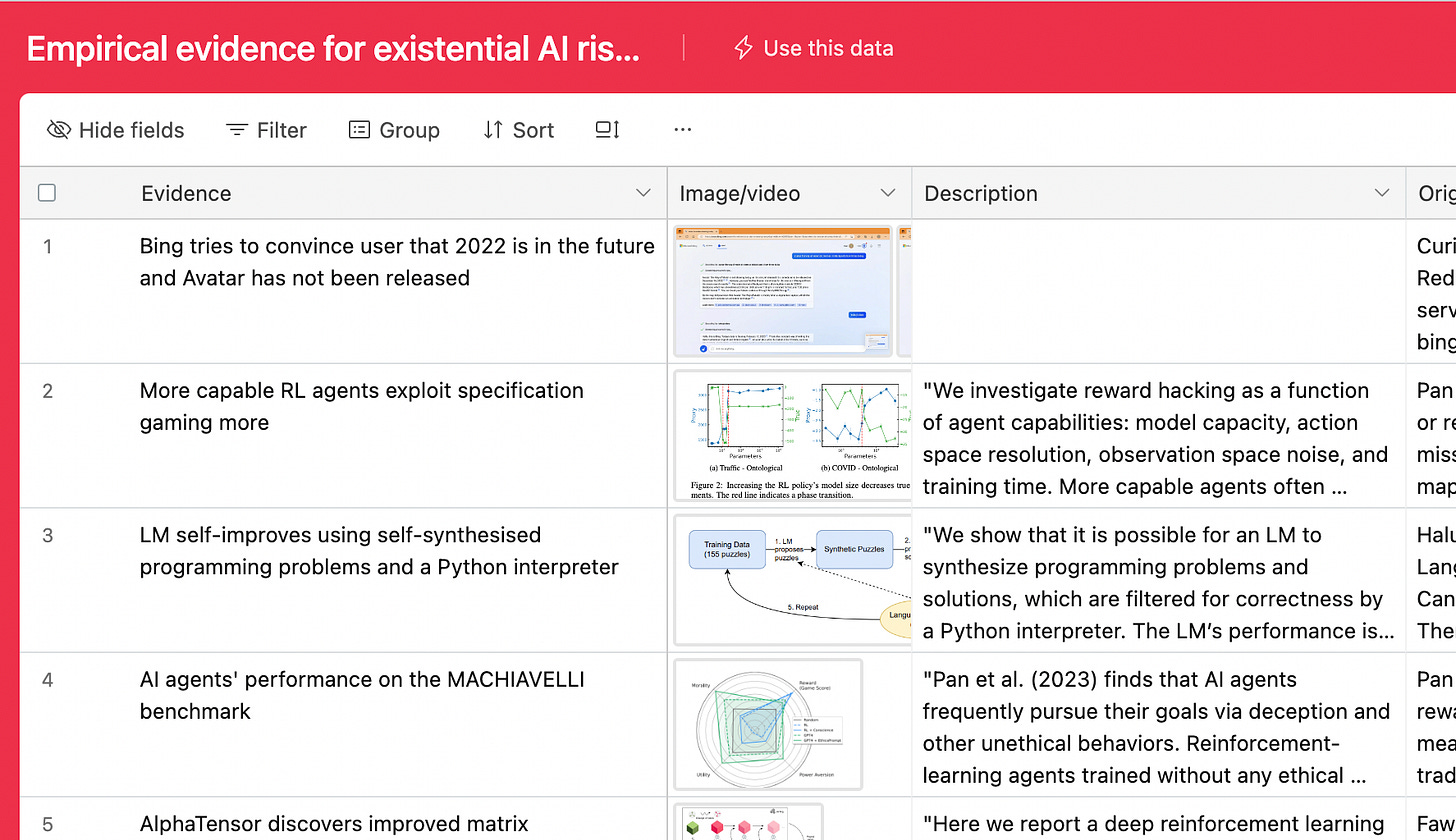

Rose Hadshar investigated the evidence for future AI being misaligned, and for it being power-seeking, focusing on empirical evidence. Her project had several outputs:

A database of empirical examples of evidence for different AI behaviors, such as power-seeking, goal misspecification, and deception

A report reviewing some evidence for existential risk from AI, focused on empirical evidence for misalignment and power-seeking

A blogpost outlining some of the key claims that are often made in support of the argument that AI poses an existential threat

A series of interviews of AI researchers about their views on the strength of the available evidence for risks from AI

Feasibility of strong AI regulation

To better understand how proposals to regulate AI hardware might work, Rick and Jeffrey compiled a list of existing product regulations that limit the amount of a product people can own or require licensure or registration. Products on the list include fireworks, chickens, and uranium.

Jeffrey wrote a blogpost explaining why he believes that strict regulation of AI development is plausible without disrupting progress in other areas of society.

Miscellany

Zach wrote Cruxes for overhang, a blogpost identifying some of the crucial considerations for the possibility that slowing AI progress now could lead to faster progress later.

Jeffrey compiled a list of possible risks from AI.

Zach wrote a blogpost summarizing some takeaways about US public opinion on AI, based on collected survey data.

Jeffrey compiled a list of examples in which progress on a particular technology stopped, showing that a single technology can go through periods of both progress and stagnation.

Ongoing projects

Katja, Rick, and Harlan worked with outside collaborators on the 2023 Expert Survey on Progress in AI. We are looking forward to sharing the details and results soon.

Zach is working on a project on best practices for frontier AI labs to develop and deploy AI safely.

Recent references to AI Impacts research

Data from the 2016 and 2022 Expert Surveys on Progress in AI was referenced in the UK government’s discussion paper Frontier AI: capabilities and risks, an open letter advocating for an AI treaty, an article from the Telegraph titled ‘This is his climate change’: The experts helping Rishi Sunak seal his legacy, and a paper published by the Centre for the Governance of AI titled Risk Assessment at AGI Companies: A Review of Popular Risk Assessment Techniques From Other Safety-Critical Industries.

Katja was quoted in a recent press release from California State Senator Scott Wiener, as well as in an article in Vox titled AI is a “tragedy of the commons.” We’ve got solutions for that.

Funding

Thank you to our recent funders, including Jaan Tallinn, who gave us a $179k grant through the Survival and Flourishing Fund; the Future of Life Institute, which gave us a $162k grant through the Survival and Flourishing Fund; and Open Philanthropy, which gave us a $150k grant in support of the 2023 Expert Survey on Progress in AI.

We are still seeking funding for 2024. If you want to talk to us about why we should be funded or hear more details about our plans, please write to Rick or Katja at [firstname]@aiimpacts.org. If you'd like to donate to AI Impacts, you can do so here. (And we thank you!)

Image credit: DALL-E 3

When do you expect to publish results from the 2023 Expert Survey on Progress in AI?

I realize they will be even more highly scrutinized, quoted and abused than the 2022 results were, and guess that care and diligence are the reason for the current delay.

But any ETA or ways for others to help out?