A mapping of claims about AI risk

Many people have argued that AI poses an existential risk, often using different terms for similar ideas.

This spreadsheet maps some of these claims to one another. Hopefully this makes it easier to navigate arguments about existential risk from AI.

Some notes and caveats:

The spreadsheet isn’t comprehensive. Many others not included here have argued that AI poses an existential risk.

The mappings are not exact, and should be read as closely related concepts rather than as identical terms.

The sources are not all of the same type. Some are papers, some are blog posts, and some are shared Google Docs.

The type signature of the claims made in the sources varies. For instance, in some cases I cite a step in a step-by-step argument, and in others I cite an item in a list of AI safety problems.

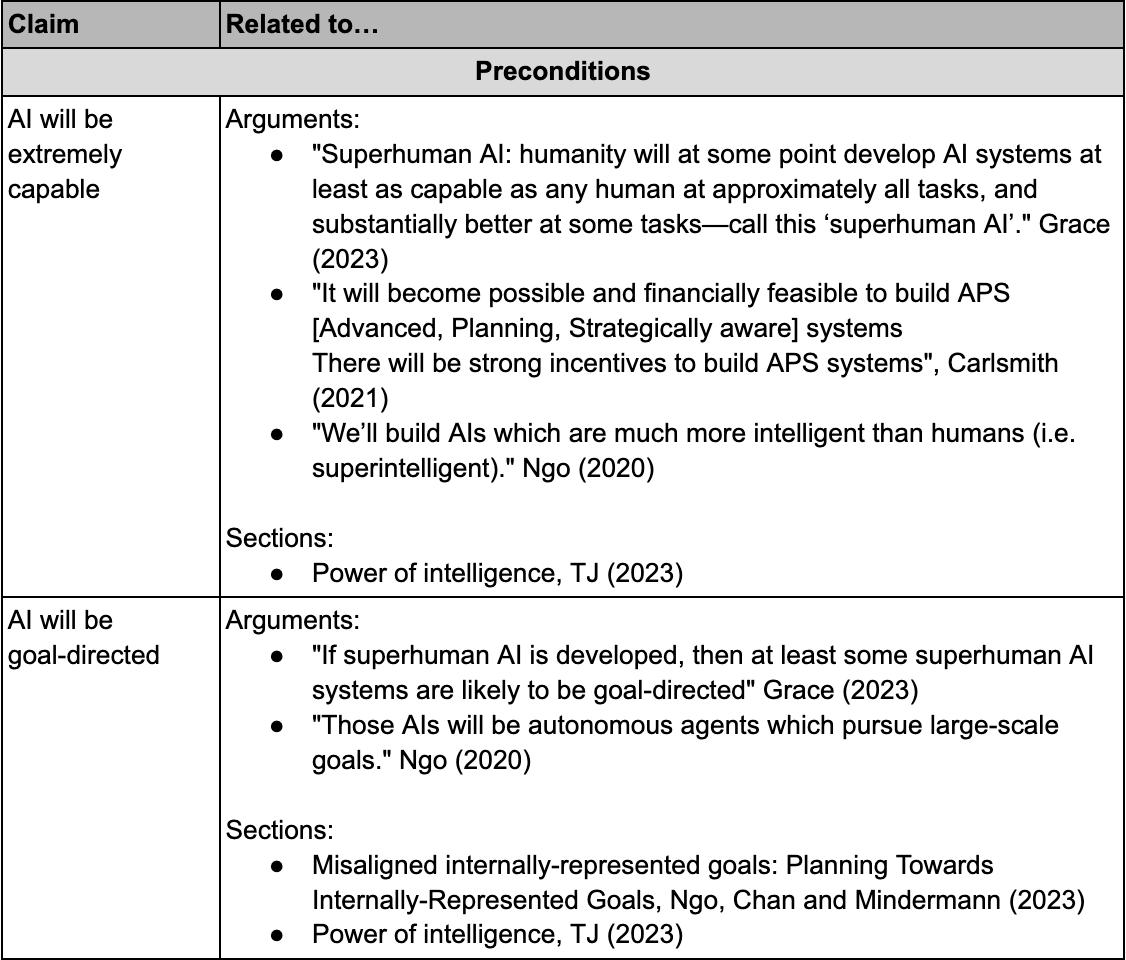

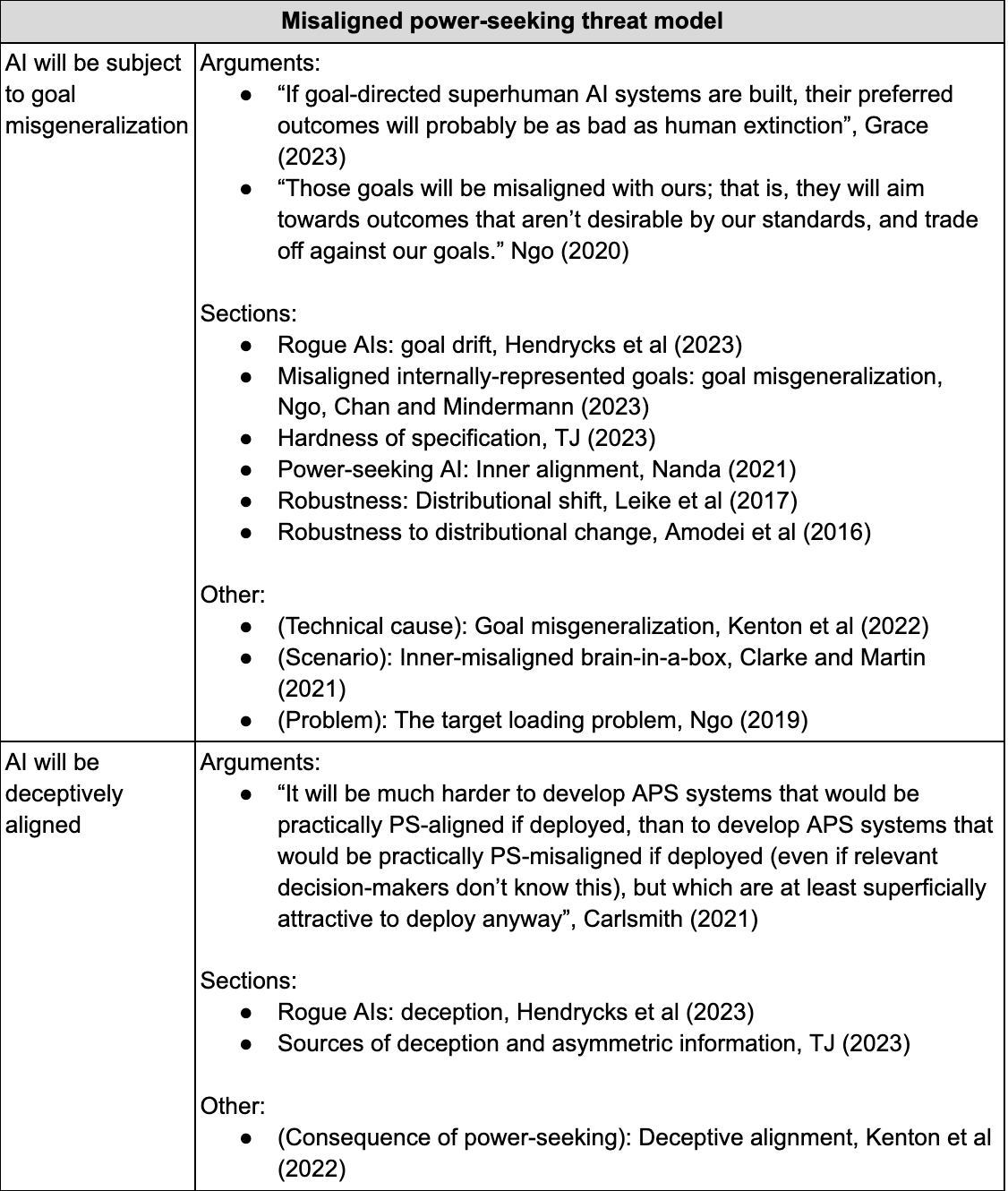

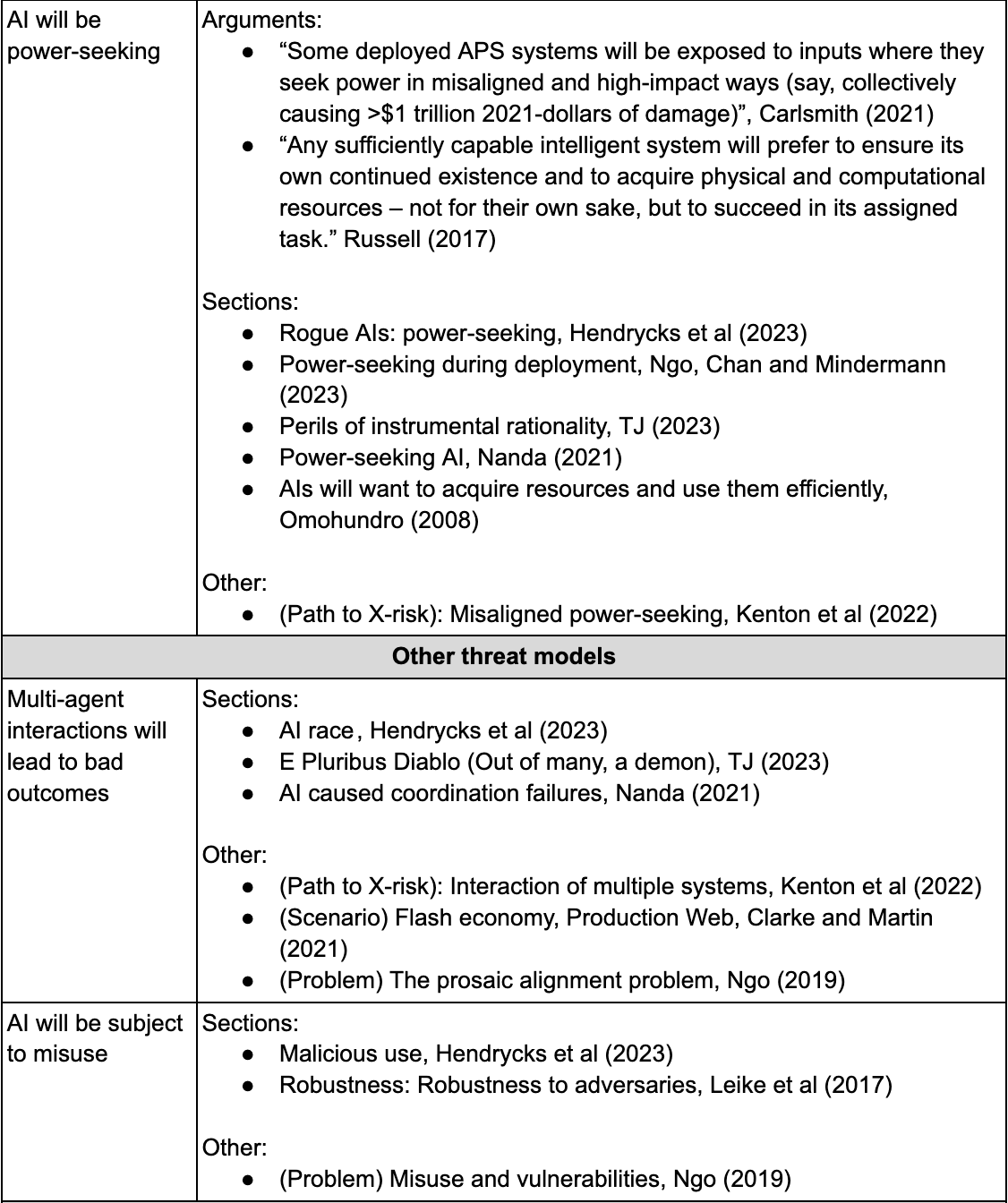

Here is a summary version of the spreadsheet in a table, for readers who prefer this format:

Sources cited in this post

Grace, Argument for AI x-risk from competent malign agents (2023)

Hendrycks et al., An Overview of Catastrophic AI Risks (2023)

Ngo, Chan and Mindermann, The Alignment Problem from a Deep Learning Perspective (2023)

TJ, Key Phenomena in AI Risk (2023)

Kenton et al., Clarifying AI X-risk (2022)

Carlsmith, Is Power-Seeking AI an Existential Risk? (2021)

Clarke and Martin, Distinguishing AI takeover scenarios (2021)

Nanda, My Overview of the AI Alignment Landscape: Threat Models (2021)

Ngo, AGI Safety From First Principles (2020)

Ngo, Disentangling arguments for the importance of AI safety (2019)

Leike et al., AI safety gridworlds (2017)

Russell, Stuart Russell's description of AI risk (2017)

Amodei et al., Concrete problems in AI safety (2016)

Omohundro, The Basic AI Drives (2008)

Thumbnail image created by Bing Chat.