When scientists consider whether their research will end the world

Five examples and what we can take away from them

Although there is currently no consensus about the future impact of AI, many experts are warning that the technology’s continued progress could lead to catastrophic outcomes such as human extinction. Interestingly, some experts who give this warning are those who work or previously worked at the labs actively developing frontier AI systems. This unusual situation raises questions that humanity has little experience with. If a scientific project might harm the entire world, who should decide whether the project will proceed? How much risk should decision-makers accept for outcomes such as human extinction?

While it is unusual for experts to consider whether their research will lead to catastrophic outcomes, it is also not completely unprecedented. Below, I will discuss the few relevant historical examples I could find, followed by some possible takeaways.

Examples

1942-1945: Manhattan Project scientists considered whether a single atom bomb would set the entire planet on fire.

During the Manhattan Project, some of the scientists became concerned that the atomic bomb they were building might generate enough heat to set off a chain reaction that would ignite the atmosphere and quickly end all life on Earth. Unlike many risks from new technologies, this one could not be directly evaluated through experimentation because there would be no survivors to observe a negative outcome of the experiment. Fortunately, the relevant physics was understood well enough to take a decent shot at evaluating the risk with theory and calculation alone. And even more fortunately, the calculations showed that atmospheric ignition was very unlikely, or at least unlikely enough that the scientists proceeded to detonate the first atomic bomb for the Trinity test.

The historical accounts differ about exactly how small the scientists believed the risk to be and what amount of risk they deemed acceptable:

If, after calculation, [Arthur Compton] said, it were proved that the chances were more than approximately three in one million that the earth would be vaporized by the atomic explosion, he would not proceed with the project. Calculation proved the figures slightly less -- and the project continued.

-Pearl S. Buck, in 1959, recalled a conversation she had with Arthur Compton, the leader of the Metallurgical Laboratory during the Manhattan Project.

There was never “a probability of slightly less than three parts in a million,”... Ignition is not a matter of probabilities; it is simply impossible.

-Hans Bethe, the leader of the T Division during the Manhattan Project, in 1976

Arthur Compton never disputed Buck’s account,[1] so Compton probably did decide that a 3×10⁻⁶ (three-in-a-million) chance of extinction was acceptable. Although Hans Bethe’s contrasting account shows some disagreement about the likelihood of the risk, the only documented attempt to decide an acceptable limit for the risk is Compton’s.

It’s hard to say whether it was reasonable for Compton to accept a 3×10⁻⁶ chance of atmospheric ignition. It’s a small number, in the same order of magnitude as one’s chance of dying from a single skydiving jump. But when it comes to setting the earth on fire, how small is small enough? One way to weigh the risk is by finding the expected number of deaths it equates to, but the answer varies depending on the assumptions used:

If the Manhattan Project scientists only gave moral value to humans who were alive in their time, then they could have multiplied the 1945 world population of ~2.3 billion by Compton’s threshold probability of 3×10⁻⁶ for an expected 7000 casualties.

As someone who lives “in the future” from the perspective of 1945, I would strongly prefer for them to have also placed moral value on humans living after their time. One analysis estimates that there could be between 10¹³ and 10⁵⁴ future humans, so a 3×10⁻⁶ chance of extinction would be equivalent to somewhere between 30 million and 3×10⁴⁸ casualties.
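Both estimates come from a single multiplication of a population by a probability. Here is a minimal Python sketch of that arithmetic, using only the figures quoted above; the function name `expected_casualties` is my own illustration and does not come from any of the cited sources.

```python
def expected_casualties(population: float, extinction_probability: float) -> float:
    """Expected deaths if everyone in `population` dies with the given probability."""
    return population * extinction_probability


COMPTON_THRESHOLD = 3e-6  # Compton's limit, as recalled by Buck

# Counting only people alive in 1945 (~2.3 billion, the figure used above).
# This comes to roughly 6,900, which the text rounds to 7000.
alive_1945 = expected_casualties(2.3e9, COMPTON_THRESHOLD)

# Counting future people too: 10^13 to 10^54, per the estimate cited above.
future_low = expected_casualties(1e13, COMPTON_THRESHOLD)
future_high = expected_casualties(1e54, COMPTON_THRESHOLD)

print(f"alive in 1945: {alive_1945:,.0f}")
print(f"including future lives: {future_low:.0e} to {future_high:.0e}")
```

The wide range in the second result comes entirely from uncertainty about how many future people there might be, not from the probability itself.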

A three-in-a-million chance of human extinction is clearly a serious risk, but it may have been reasonable given how gravely important Compton and his colleagues believed the Manhattan Project to be. In his interview with Buck, Compton made it clear that he considered the stakes to be extremely high, saying that it would have been “better to accept the slavery of the Nazis than to run the chance of drawing the final curtain on mankind.”

Because of the secrecy demanded by the Manhattan Project, the public had no knowledge of the risk of atmospheric ignition until many years later. The assessment of the risk and the decision about how to handle it were likely made by a relatively small number of scientists and government officials.

1973-1975: Biologists considered whether recombinant DNA research would create deadly pathogens.

Methods for genetic recombination quickly progressed in the early 1970s, making it easier to combine the DNA of multiple organisms and causing some biologists to become concerned that their research could create deadly new pathogens. In 1972, biochemist Paul Berg and his colleagues combined the DNA of a cancer-causing virus with the DNA of the E. coli bacterium, which lives in the human gut. Berg planned to insert this recombinant DNA back into E. coli bacteria but was convinced by his colleagues to halt the experiment over fears that the altered bacteria might get out of the lab and harm the world.

Prompted by these concerns, the National Academy of Sciences (NAS) formed a committee chaired by Berg to evaluate the risk. In 1974, the committee asked for a moratorium on certain types of recombinant DNA experiments. Despite disagreement among biologists, the moratorium was adhered to.

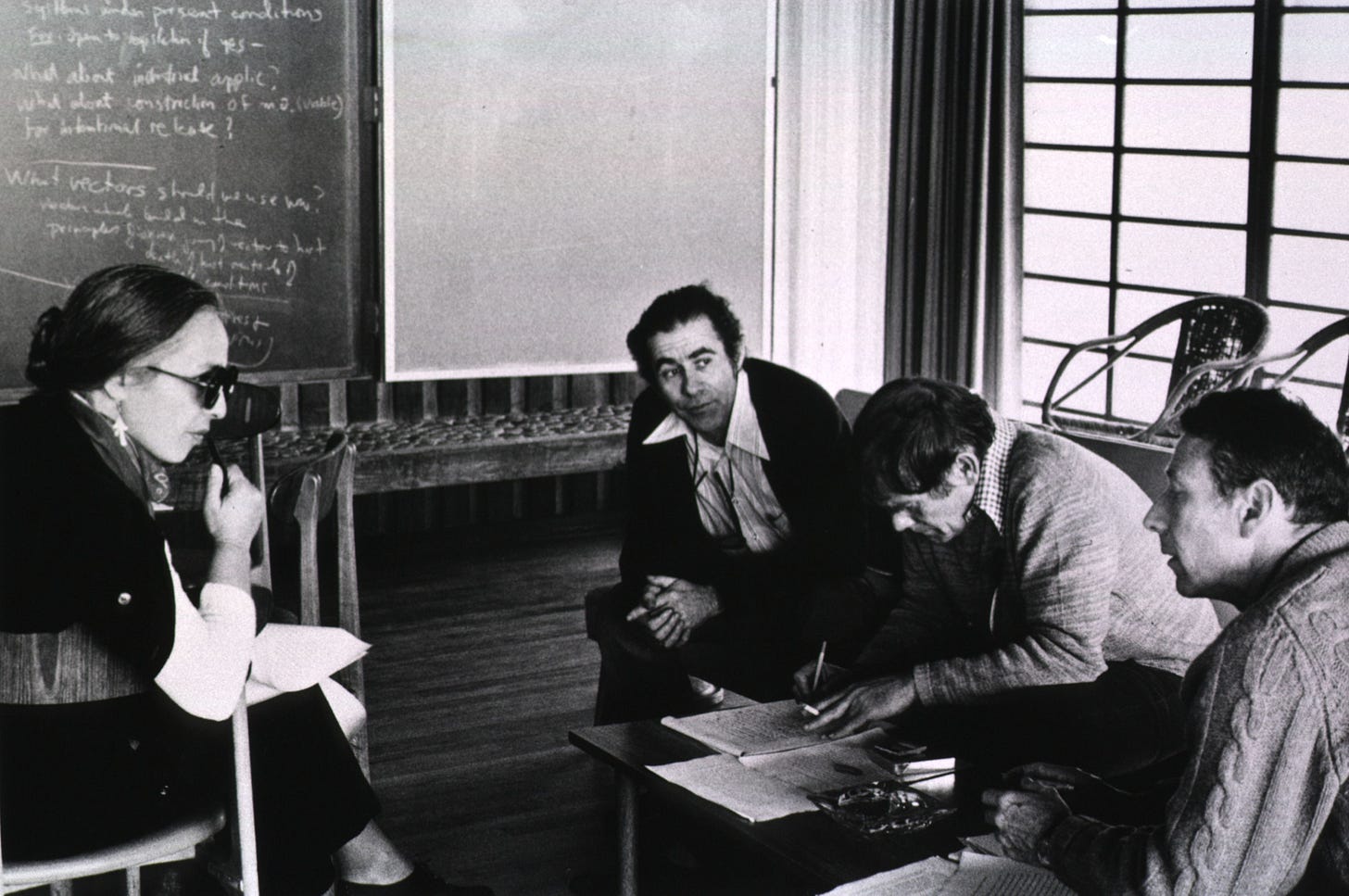

In 1975, scientists, lawyers, and policymakers met for the Asilomar Conference on Recombinant DNA Molecules to determine whether to lift the moratorium. Twelve journalists were also selected for invitation to the conference, possibly due to fears about cover-up accusations in the wake of the recent Watergate scandal. The conference ultimately concluded that most recombinant DNA research should be allowed to proceed under strict guidelines but that experiments involving highly pathogenic organisms or toxic genes should be forbidden. The guidelines were adopted by the National Institutes of Health as a requirement for funding.

Unlike the physicists at the Manhattan Project, biologists in the 1970s did not have the theoretical understanding to confidently evaluate the risk and build a consensus around it. It’s unclear how likely or severe they thought the risks from recombinant DNA were. In 2015, Berg said that “if you sampled our true feelings, the members of the committee believed the experiments probably had little or no risk, but … nobody could say zero risk.” In the decades following the conference, recombinant DNA experiments were mostly safe, and many of the guidelines were gradually scaled back over time. In 2007, Berg said that they “overestimated the risks, but [they] had no data as a basis for deciding, and it was sensible to choose the prudent approach.”

1999: Physicists considered whether heavy-ion collider experiments would destroy the planet.

In 1999, the year before the Relativistic Heavy Ion Collider (RHIC) began operating at Brookhaven National Laboratory (BNL), members of the public became concerned by media reports about a chance that the collider could create miniature black holes that would destroy the earth. In response to these concerns, the director of the BNL convened a committee of physicists to write a report addressing the risks.

The report, authored by Busza et al., uses a combination of theory and empirical evidence to demonstrate that the RHIC was very unlikely to cause a catastrophe. The report evaluates three types of speculated catastrophic risks from RHIC but mostly focuses on the risk of dangerous ‘strangelets.’[2] In the report, the authors say that theoretical arguments related to strangelet formation are enough on their own to “exclude any safety problem at RHIC confidently.” They also make an empirical argument based on the observation that the moon has not been destroyed despite constant bombardment by cosmic rays. Based on that empirical evidence, they derive an upper bound for the probability that ranges from 10⁻⁵ (one in a hundred thousand) to 2×10⁻¹¹ (two in one hundred billion) depending on the assumptions made.

Another report, authored by Dar et al. and published by CERN, used a similar empirical argument about the frequency of supernovae to calculate an upper bound of 2×10⁻⁸ (two in a hundred million) for the likelihood of dangerous strangelets being produced at RHIC.

What did these physicists believe about the limit of acceptability for risks of this severity? Dar et al. describe their bound of 2×10⁻⁸ as “a safe and stringent upper bound.” In the first version of their paper, Busza et al. described their bounds as “a comfortable margin of error,” but in the final version, they instead say that “We do not attempt to decide what is an acceptable upper limit on p, nor do we attempt a ‘risk analysis,’ weighing the probability of an adverse event against the severity of its consequences.”

The following year, physicist Adrian Kent released a paper criticizing Dar et al. and Busza et al. for a lack of nuance in their assessment of the risks. Kent points out that, even without considering future lives, Dar et al.’s “safe and stringent upper bound” of 2×10⁻⁸ would imply 120 casualties in expectation over the course of ten years. He says that “despite the benefits of RHIC, the experiment would not be allowed to proceed if it were certain (say, because of some radiation hazard) to cause precisely 120 deaths among the population at large.”
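To see where a figure like 120 can come from, it helps to work backwards from Dar et al.’s bound of 2×10⁻⁸ quoted earlier. The sketch below is my own back-of-the-envelope check, not a reproduction of Kent’s calculation: the population of about 6 billion that falls out of it is an inference from the arithmetic (it happens to match the world population around 2000), not a number quoted from his paper.

```python
# Back out the population implied by an expectation of 120 deaths.
# The resulting ~6 billion is inferred from the arithmetic alone;
# it is an assumption, not a figure taken from Kent's paper.
DAR_BOUND = 2e-8        # Dar et al.'s upper bound on catastrophe at RHIC
EXPECTED_DEATHS = 120   # the expectation Kent derives from that bound

implied_population = EXPECTED_DEATHS / DAR_BOUND

# Reverse check: population times probability should recover the expectation.
recovered_deaths = implied_population * DAR_BOUND

print(f"implied population: {implied_population:.1e}")
print(f"expected deaths: {recovered_deaths:.0f}")
```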

Kent uses a back-of-the-envelope calculation involving established risk tolerances for radiation hazards to propose that the acceptable upper bound for risk of extinction should be 10⁻¹⁵ (one in a quadrillion) per year if not accounting for future lives and 10⁻²² (one in ten billion trillion) per year if accounting for future lives.[3] He also argues that the acceptable risk bounds for a project should be decided further in advance, and agreed on by experts that aren’t actively involved in the project.[4]

2005-?: The SETI community considers whether messages sent to space will invite the attention of hostile extraterrestrials.

The search for extraterrestrial intelligence (SETI) involves monitoring the cosmos for signals of extraterrestrial life. Around 2005, some members of the SETI community became enthusiastic about the idea of “Active SETI,” which refers to intentionally sending messages to the cosmos. This sparked an intense debate within the community: some were concerned that Active SETI could endanger humanity by attracting the attention of hostile extraterrestrials. A 2006 article in Nature argued that these were “small risks” that “should nevertheless be taken seriously.” Supporters of Active SETI argued that extraterrestrial civilizations could likely detect radio signals from Earth regardless of whether messages with more powerful signals were sent.

Science fiction author David Brin was one of the concerned figures in the SETI community. In 2006, Brin wrote that he and others in the community had called for a conference to discuss the risks of Active SETI but that their concerns had been largely ignored by the rest of the community. Frustrated by what he perceived as a failure of Active SETI supporters to consult with their colleagues,[5] Brin considered making the issue more public by contacting journalists with the story. Brin wrote that he preferred the “collegiate approach,” though, because public attention on the issue could damage SETI's reputation as a whole, and it’s unclear if he ever attempted to bring the story to journalists.

A permanent committee in the International Academy of Astronautics (IAA) is the closest thing to a regulatory body within the SETI community. In 2007, this committee drafted new principles regarding sending messages to extraterrestrials, including that the decision of whether to do so “should be made by an appropriate international body, broadly representative of Humankind.” However, these principles were never adopted.

The debate over Active SETI was renewed in 2010. Stephen Hawking made headlines by saying that it was a bad idea. At a two-day conference hosted by the Royal Society, members of the SETI community had a heated debate but did not reach a consensus. At its annual meeting, the IAA SETI committee updated its Declaration of Principles for the first time in over 20 years, but the updated principles still made no mention of Active SETI.[6]

In 2015, the community debated the issue again at another conference with no resolution. That same year, 28 scientists and business leaders, including Elon Musk, signed a statement calling for “a worldwide scientific, political and humanitarian discussion” before continuing Active SETI, with an emphasis on the uncertainties that surround the existence, capabilities, and intentions of potential extraterrestrial intelligence.

The debate about whether the risks of Active SETI are acceptable appears to still be unresolved, and it’s unclear if the two sides of the debate are still discussing it with each other. The most recent transmission listed on the Active SETI Wikipedia page was sent in 2017.

1951-present: Computer scientists consider whether a sufficiently powerful misaligned AI system will escape containment and end life on Earth.

Concern about the impact of powerful AI systems dates back to the beginning of modern computer science:

Let us now assume, for the sake of argument, that [intelligent] machines are a genuine possibility, and look at the consequences of constructing them... There would be no question of the machines dying, and they would be able to converse with each other to sharpen their wits. At some stage therefore we should have to expect the machines to take control, in the way that is mentioned in Samuel Butler's Erewhon.

-Foundational computer scientist Alan Turing in 1951.

Today, many experts are concerned about the risk that there may eventually be an AI system that is much more capable than humans with goals that are not aligned with those of humans. When pursuing its goals, such a system might cause human extinction through its efforts to acquire resources or survive.

Unlike the scientists at the Manhattan Project or Brookhaven National Laboratory, AI researchers have no agreed-upon method for calculating the amount of extinction risk. Theoretical understanding of the nature of intelligence does not yet have the strong foundation seen in fields such as nuclear physics. Empirical data is limited because no human has ever interacted with an intelligence more capable than all humans.

Expert opinions vary widely about the amount of risk posed by powerful AI systems:

Decision theorist Eliezer Yudkowsky argues that, with our current techniques, a powerful AI would be “roughly certain to kill everybody.”

Former OpenAI researcher Paul Christiano believes that the total risk of extinction from AI is 10-20%.

Two researchers who describe themselves as AI optimists argue that “a catastrophic AI takeover is roughly 1% likely.”

In a recent survey of AI researchers, the median researcher gave a 5-10% chance[7] of catastrophic outcomes such as human extinction.

Although it is common to see estimates of the extinction risk from AI, there seem to be few attempts to set an acceptable upper limit for it. Theoretical computer scientist Scott Aaronson wrote that his limit “might be as high as” 2% if the upside would be that we “learn the answers to all of humanity’s greatest questions.” Even without taking future lives into account, a 2% extinction risk is equivalent to around 160 million casualties in expectation, roughly four times the population of Canada.[8] It’s difficult to say whether the potential benefits of powerful AI systems would justify taking that relatively high risk.

Citing the scientific uncertainty about the future outcomes of AI development, an open letter from earlier this year called for a moratorium on frontier AI development. The letter was signed by some prominent experts, including Yoshua Bengio, a Turing Award winner, and Stuart Russell, co-author of the standard textbook “Artificial Intelligence: A Modern Approach.” As of this writing, no moratorium seems to have taken place; just two weeks ago, Google announced the release of its “largest and most capable AI model.”

Although discussions about extinction risks from AI have historically been rare outside of a relatively small research community, many policymakers, journalists, and members of the public have recently become more involved. Last month, the AI Safety Summit in the UK brought experts and world leaders together to discuss risks from AI and how to mitigate them.

Takeaways

Scientists sometimes disagree about the acceptable upper limit for extinction risks but usually agree that it should be extremely small.

For three cases, I found examples of scientists suggesting an acceptable limit for risk of extinction:

A Manhattan Project scientist set his upper limit at 3×10⁻⁶ (three in a million).

Scientists at CERN considered a 2×10⁻⁸ (two in a hundred million) risk to be acceptable for RHIC, but another physicist argued that a more appropriate limit would be 10⁻¹⁵ (one in a quadrillion) or 10⁻²² (one in ten billion trillion).

One prominent computer scientist might accept as much as a 2×10⁻² (two in a hundred) chance of extinction from powerful AI.

Although these upper limits vary widely, they all fall below the 5×10⁻² (five in a hundred) to 10⁻¹ (one in ten) odds the median expert gave for extinction from AI last year, and usually by many orders of magnitude. Notably, this is true even about the upper limit given by a scientist who reasonably believed that stopping his research might lead to Nazis ruling the world.
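To make “many orders of magnitude” concrete, the gap between each proposed limit and the low end of the median survey estimate can be computed with a base-10 logarithm. This is a rough sketch using only numbers quoted in this article; the labels and the function name are mine, and it glosses over the fact that Kent’s limits are stated per year while the others are not.

```python
import math

# Proposed acceptable limits for extinction risk, as quoted in this article.
proposed_limits = {
    "Compton (Manhattan Project)": 3e-6,
    "Dar et al. (RHIC)": 2e-8,
    "Kent, present lives only": 1e-15,      # per year
    "Kent, including future lives": 1e-22,  # per year
    "Aaronson (AI)": 2e-2,
}

# Low end of the median survey estimate for AI extinction risk (5%).
MEDIAN_ESTIMATE_LOW = 5e-2


def orders_of_magnitude_below(limit: float, estimate: float) -> float:
    """Number of powers of ten by which `limit` sits below `estimate`."""
    return math.log10(estimate / limit)


gaps = {
    name: orders_of_magnitude_below(limit, MEDIAN_ESTIMATE_LOW)
    for name, limit in proposed_limits.items()
}

for name, gap in gaps.items():
    print(f"{name}: {gap:.1f} orders of magnitude below 5%")
```

Only Aaronson’s limit lands within a single order of magnitude of the survey estimate; every other limit is at least four orders of magnitude stricter.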

When there is significant uncertainty about the risks, it is common to request a moratorium.

In each of the three cases where there was no clear consensus about the amount of risk, some portion of the scientific community called for a moratorium to discuss the issue and collect more evidence:

In the case of recombinant DNA, the moratorium was adhered to by the entire community despite disagreements. A conference established safe guidelines for some research and a prohibition on the most dangerous types of research. Over time, these guidelines were relaxed as recombinant DNA was shown to be mostly safe.

In the case of Active SETI, concerned community members who called for a moratorium and conference were initially dismissed. Later, there were conferences to discuss the issue, but the community never agreed on a set of guidelines.

In the case of frontier AI systems, only in the last few months has a moratorium been earnestly requested. No moratorium has yet occurred, but there is a growing discussion among researchers, policymakers, and the public about the potential risks and policies to address them.

By default, scientists prefer keeping discussions of extinction risk within their community.

All five cases contain some degree of interaction between the scientific community and policymakers or the public:

During the Manhattan Project, there was no public knowledge of the risk of atmospheric ignition, but the situation involved interaction between scientists and government officials. One of the scientists complained that the risk “somehow got into a document that went to Washington. So every once in a while after that, someone happened to notice it, and then back down the ladder came the question, and the thing never was laid to rest.”

At the Asilomar Conference on Recombinant DNA, 12 journalists were invited, but possibly only out of a fear that the scientists would be accused of a cover-up otherwise.

Risk assessments for heavy-ion collider experiments were published only a year before RHIC began to operate, and seemingly only because of unexpected media attention.

A concerned member of the SETI community considered bringing the issue of Active SETI to journalists but said that he preferred to discuss the issue within the community.

Discussion of risks from AI has recently grown among policymakers and the public. Previously, there was relatively little discussion of the risks outside the research community.

[1] Arthur Compton died in 1962, three years after the article was published in The American Weekly. He was delivering lectures in the month before his death.

[2] The other two risks addressed by the paper are gravitational singularities and vacuum instability. The authors use a theoretical argument to show that gravitational singularities are very unlikely but do not estimate a bound on the probability. For the vacuum instability scenario, they cite earlier work which argues that cosmic ray collisions have occurred many times in Earth’s past lightcone, and the fact that we exist is empirical evidence that such collisions are safe. “On empirical grounds alone, the probability of a vacuum transition at RHIC is bounded by 2×10⁻³⁶.” However, I am personally skeptical of this argument because it seems to ignore the effect of anthropic shadow.

[3] Kent’s estimate of future lives is highly conservative compared to some other estimates: his calculation assumes only that the human population will hold constant at 10 billion until the earth is consumed by the sun.

[4] Kent said that “future policy on catastrophe risks would be more rational, and more deserving of public trust, if acceptable risk bounds were generally agreed ahead of time and if serious research on whether those bounds could indeed be guaranteed was carried out well in advance of any hypothetically risky experiment, with the relevant debates involving experts with no stake in the experiments under consideration.”

[5] “...this would seem to be one more example of small groups blithely assuming that they know better. Better than the masses. Better than sovereign institutions. Better than all of their colleagues and peers. So much better — with perfect and serene confidence — that they are willing to bet all of human posterity upon their correct set of assumptions.” -David Brin

[6] Although the declaration says nothing about actively sending messages, it does contain a principle about responding to messages: “In the case of the confirmed detection of a signal, signatories to this declaration will not respond without first seeking guidance and consent of a broadly representative international body, such as the United Nations.”

[7] The median response is represented here as a range because it varied based on question framing.

[8] If we do take future lives into account, it might be equivalent to expected casualties ranging from 200 billion to 2×10⁵² (more than the number of atoms on earth).

Great article! Another possible case study: the Apollo missions, IIRC, had a bio-quarantine procedure for the astronauts, in case they had contracted some sort of contagious moon disease. I don't know much about the story and am unsure why they took this concern seriously, but they seem to have.

Another possible case study would be scientists and engineers and military types building the nuclear arsenals and MAD systems and so forth being concerned that global nuclear war could be triggered by accident (or on purpose for that matter).

And then there's some stuff in synthetic bio...

This was a great read. The recombinant DNA story reminded me of a similar, recent example from biology: the invention of CRISPR. Jennifer Doudna, the technology's inventor, realized that her technology could be used for evil - she had a dream about Adolf Hitler using her invention.

Afterwards, she publicly called for a halt to human gene editing and met with peers to discuss CRISPR's dangers. Eventually, that led to the 2015 International Summit on Human Gene Editing, which decided on a self-imposed gene editing moratorium.